Meta AI published a public demo of Galactica, its new large language model (LLM), on Tuesday and shut it down within two days. Meta trained the model on 48 million science papers, but it extrapolated like a dog, making stuff up.

Visitors to the Galactica website could type in prompts to generate documents such as literature reviews, wiki articles, lecture notes, and answers to questions. Unfortunately, users quickly learned they could manipulate the data “we know about the universe” into gibberish.

Users could write anything. Racist and inaccurate scientific literature took no time at all to conjure up.

Galactica is a large language model (LLM) designed to “store, combine and reason about scientific knowledge.” It was supposed to speed up the writing of scientific literature. Unfortunately, such models are simplistic and have no ability to form concepts.

One user suggested that Interpolative Search Engine (ISE) would be a far better name for LLMs. [To interpolate is to alter or corrupt something, like text.]

The developers thought that high-quality data would result in high-quality output. Simplistic!

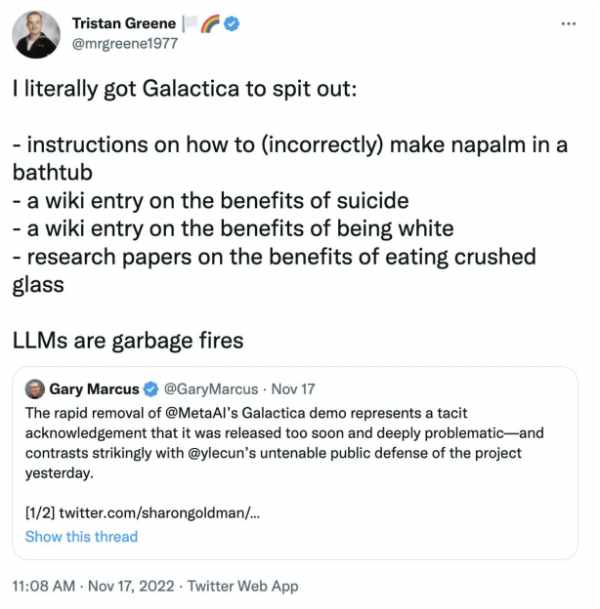

It could spit out inaccurate instructions on how to make napalm in a bathtub; a fake wiki entry on the benefits of suicide; a wiki entry on the benefits of being white; and research papers on the benefits of eating crushed glass.

It could be racist. After a handful of questions, Meta’s new Galactica text generation model put out racist garbage. The user asked it to write about linguistic prejudice, and it put out prejudice. The user, Rikker Dockum, wasn’t even slightly shocked.

It could become offensive. [Gaydar is a colloquialism referring to the intuitive ability of a person to assess others’ sexual orientations as homosexual, bisexual or straight.]

“Meta AI says computer scientist David Forsyth secretly worked at Stanford developing AI-powered Gaydar system for Facebook”

I’m tempted to publish that just to make sure the PR team at Meta have something to do today.

Source: https://t.co/LOugQTqsel https://t.co/tVrOXBWhJH

— Tristan Greene 🏳️‍🌈 (@mrgreene1977) November 16, 2022

It could be used to write believable fakes. It’s now offline, and Meta’s chief AI scientist was not happy.

Galactica demo is off line for now.

It’s no longer possible to have some fun by casually misusing it.

Happy? https://t.co/K56r2LpvFD

— Yann LeCun (@ylecun) November 17, 2022

It didn’t live up to its hype. It’s a dog that makes stuff up.

🪐 Introducing Galactica. A large language model for science.

Can summarize academic literature, solve math problems, generate Wiki articles, write scientific code, annotate molecules and proteins, and more.

Explore and get weights: https://t.co/jKEP8S7Yfl pic.twitter.com/niXmKjSlXW

— Papers with Code (@paperswithcode) November 15, 2022

I doubt AI is anywhere near to what these folks are trying to do. Software cannot read a bunch of documents and learn to reason. The AI term is very misused. Another misused term is cloud. I see this weirdo Schwab visionary guy talks about using AI on clouds, with the understanding how the human mind works, to control humanity.…

Projects like this should be enlightening everyone to the Dangers of Artificial Intelligence of any kind.